Intro

Exploring AI automation tools without restrictions is a phrase that sparks curiosity but also raises important red flags. If you landed here, you most likely want information with a practical bent: what "no-limits" exploration looks like, which tools support rapid experimentation, and how to do this safely and legally.

In my experience experimenting with AI toolchains, the most useful approach is to separate capability discovery from misuse. You can learn what powerful automation tools do, how to prototype aggressively, and still obey platform rules, privacy norms, and law. This guide walks you through the core concepts, safe testing playbooks, hands-on code examples, recommended platforms, and a strict legal and ethical checklist so you can explore boldly, without crossing lines.

What “exploring AI automation tools without restrictions” really means, and why it matters

The phrase “exploring AI automation tools without restrictions” usually signals one of two things. Either you want to evaluate the raw capabilities of AI-driven automation quickly, or you are looking for ways to bypass built-in limits such as rate caps, content filters, or API constraints. The safe, constructive route focuses on the first meaning: learning capabilities, integrations, and trade-offs.

What counts as “automation tools”

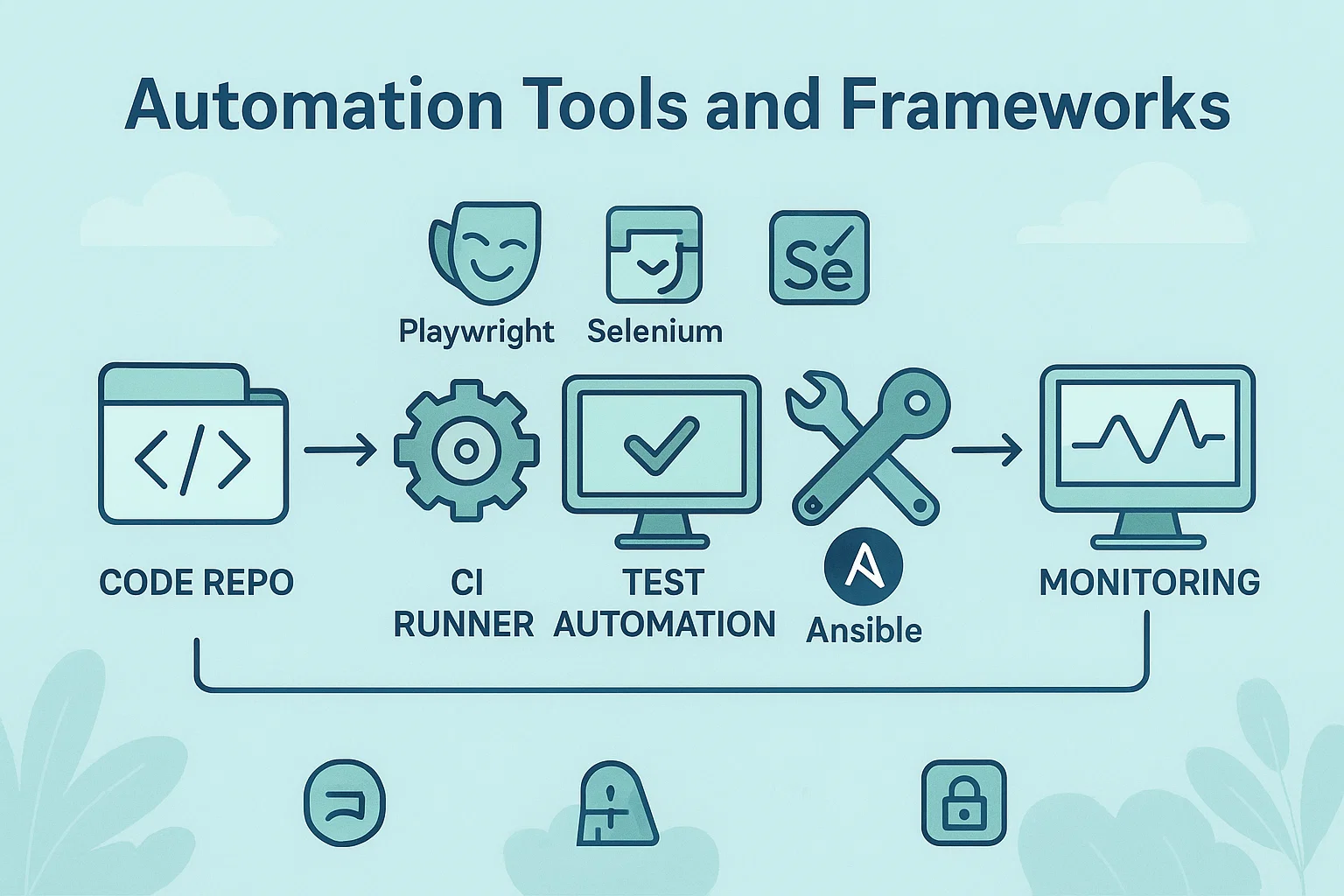

When people talk about AI automation tools, they mean several related categories:

- RPA with AI augmentation, bots that handle desktop tasks, form entry, and process automation enhanced by computer vision and NLP.

- Workflow platforms with model hooks, which call ML models for decisions in multi-step flows.

- Model orchestration frameworks, for chaining models, tools, and APIs into complex pipelines.

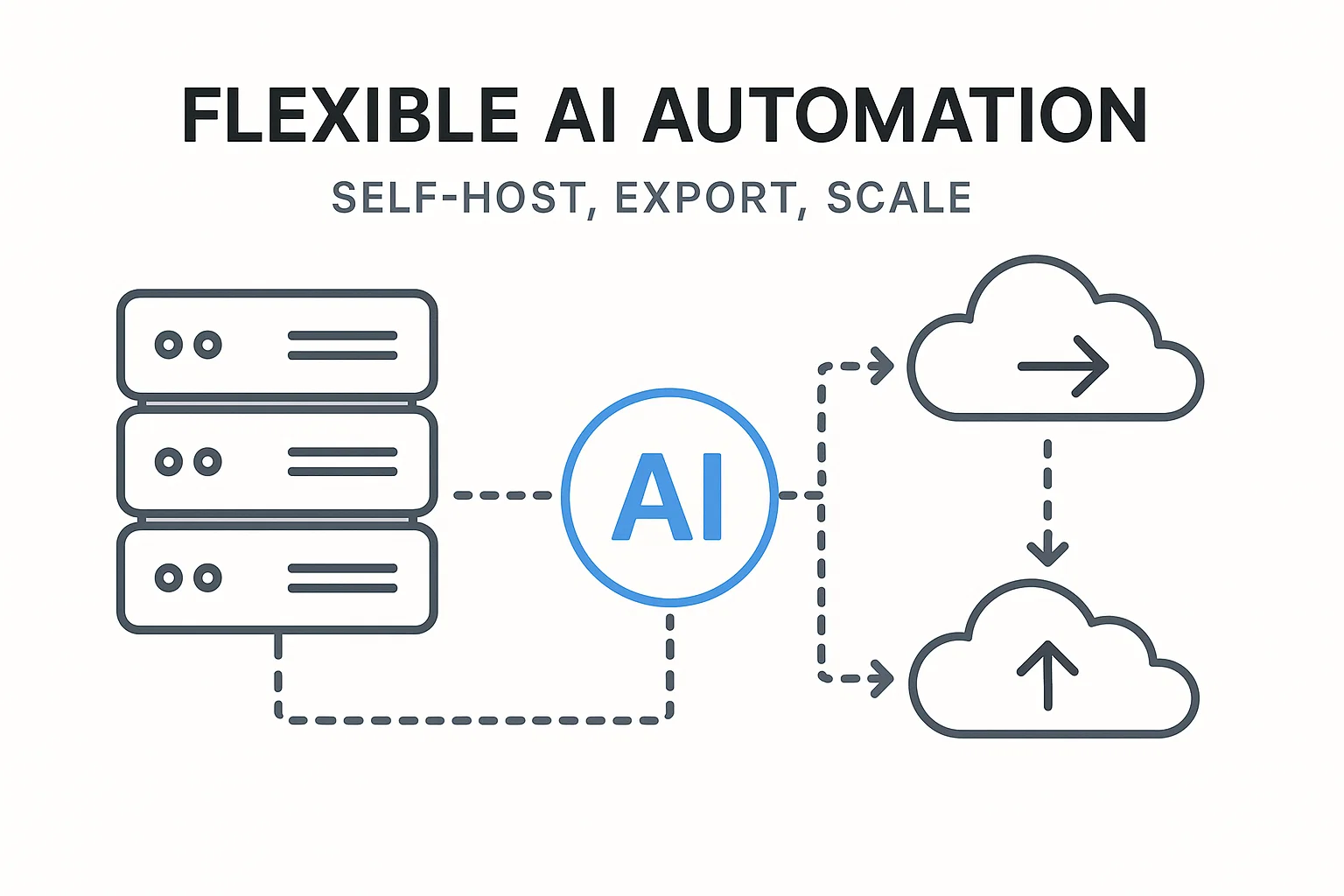

- Local model toolchains, used for experimentation when you want full control of inputs and outputs.

A short background

Rapid experimentation accelerates learning, but raw exploration without guardrails can produce privacy leaks, unsafe outputs, or violations of service policies. That matters because irresponsible experiments can harm people, expose sensitive data, and get you blocked by platforms.

Paraphrasing operational guidance from platform safety playbooks (see, for example, Google's responsible AI guidance): builders should separate research environments from production and document their risk controls.

How to explore AI automation tools safely, step by step

If your goal is to discover capabilities and prototype fast, follow a controlled process that balances curiosity and responsibility.

- Define the exploration scope: decide what you are testing, why it matters, and what success looks like. Keep scope narrow to reduce risk.

- Create an isolated test environment: use sandbox accounts, ephemeral keys, and isolated cloud projects to avoid accidental data leaks.

- Prefer synthetic or consented data: use generated or consented datasets, not private user data, when testing new prompts or automations.

- Layer protections early: add input sanitization, response filters, and usage quotas to your test harness before running wide experiments.

- Log everything with audit trails: capture inputs, model responses, and metadata for later review and reproducibility.

- Use rate limits and retries: treat APIs as shared resources, implement backoff logic, and avoid aggressive parallelism that could trigger bans.

- Move from ephemeral tests to controlled pilots: if a prototype shows promise, wrap it in governance, security reviews, and user consent before wider rollout.
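The quota and audit-trail steps above can live in one small wrapper around whatever model call you are testing. The following is a minimal Python sketch, not a prescribed design: `ExperimentHarness` and `call_fn` are hypothetical names, and `call_fn` stands in for your provider SDK or local model, taking a prompt string and returning a response string.

```python
import json
import time
from typing import Any, Callable


class ExperimentHarness:
    """Wrap a model call with a hard usage quota and a JSON-lines audit trail.

    `call_fn` is a hypothetical stand-in for your provider or local model call.
    """

    def __init__(self, call_fn: Callable[[str], str], max_calls: int, log_path: str):
        self.call_fn = call_fn
        self.max_calls = max_calls  # quota for this experiment run
        self.log_path = log_path
        self.calls = 0

    def run(self, prompt: str, **metadata: Any) -> str:
        if self.calls >= self.max_calls:
            raise RuntimeError("Experiment quota exhausted")
        self.calls += 1  # counts attempts, including ones that later fail
        started = time.time()
        response = self.call_fn(prompt)
        record = {
            "ts": started,
            "latency_s": round(time.time() - started, 3),
            "prompt": prompt,
            "response": response,
            "metadata": metadata,  # e.g. model version, temperature
        }
        # One JSON object per line keeps logs easy to grep and replay.
        # Raw prompts are stored here, so scrub PII before sharing the file.
        with open(self.log_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return response
```

For a dry run you can pass a trivial function, for example `ExperimentHarness(lambda p: p.upper(), max_calls=2, log_path="audit.jsonl")`, and check that the third call is refused and the log holds two lines.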

Quick experiment harness, Node.js (webhook orchestration)

```javascript
// Node.js 18+: simple, safe experiment harness that POSTs to an AI HTTP endpoint.
// Swap `callModel` for your provider SDK if it has one; keep keys in env vars.
// Uses the built-in fetch (Node 18+), so no node-fetch dependency is needed.

async function callModel(payload) {
  try {
    // Auth comes from env; respect your provider's documented rate limits.
    const resp = await fetch(process.env.AI_ENDPOINT, {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${process.env.AI_KEY}`,
        'Content-Type': 'application/json',
      },
      body: JSON.stringify(payload),
      // Abort the request if it takes longer than 15 seconds.
      signal: AbortSignal.timeout(15000),
    });
    if (!resp.ok) throw new Error(`HTTP ${resp.status}`);
    return await resp.json();
  } catch (err) {
    console.error('Model call failed', err);
    throw err;
  }
}

// Usage (payload shape depends on your provider):
// callModel({ prompt: 'Test', max_tokens: 200 });
```

This harness wraps calls in try/catch and reads credentials from environment variables, which reduces the risk of accidental key leakage (for example, committing a key to source control).
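The harness above leaves rate limiting to the provider and has no retry logic of its own. Below is a minimal sketch of exponential backoff with full jitter, written in Python to match the local-testing example in this guide. `RateLimitError` and `with_backoff` are hypothetical names: you would raise `RateLimitError` from your own client when the provider returns HTTP 429.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error type: raise it from your client on HTTP 429 responses."""


def with_backoff(fn: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 0.5, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying on RateLimitError with exponential backoff and full jitter."""
    attempt = 0
    while True:
        try:
            return fn()
        except RateLimitError:
            if attempt >= max_retries:
                raise  # give up after the final retry
            # The delay cap doubles each attempt; full jitter picks a random point below it.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
            attempt += 1
```

Injecting `sleep` keeps the function testable without real waiting, and the random jitter spreads retries out so parallel workers do not retry in lockstep.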

Local testing with Python, sandboxed inference

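```python
# Python: run a local model inference with input checks.
# `model` is any object exposing generate(prompt); adapt this to your runtime.
def safe_infer(model, prompt, validator, output_filter=None):
    """Run model.generate(prompt) only if validator(prompt) returns True.

    Returns the (optionally filtered) result, or None if the input is
    rejected or inference fails.
    """
    try:
        if not validator(prompt):
            raise ValueError("Input failed safety checks")
        result = model.generate(prompt)
        # Post-filter results: output_filter should redact or drop sensitive content.
        if output_filter is not None:
            result = output_filter(result)
        return result
    except Exception as e:
        print("Inference error:", e)
        return None
```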

Use validators to block PII, credentials, and harmful content before inference.
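A validator can start as a handful of regular expressions. The sketch below is illustrative only: the three patterns (email addresses, US SSN-shaped numbers, and key-like tokens such as `sk-...`) are assumptions, not a complete PII detector, and `pii_validator` and `redact` are hypothetical helper names. `pii_validator` has the `validator(prompt) -> bool` shape that `safe_infer` expects, and `redact` can be used to post-filter results.

```python
import re

# Illustrative patterns only; real deployments need a proper DLP or PII service.
_BLOCKLIST = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),                              # email address
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                                  # SSN-shaped number
    re.compile(r"\b(?:sk|api|key)[-_][A-Za-z0-9]{16,}\b", re.IGNORECASE),  # key-like token
]


def pii_validator(prompt: str) -> bool:
    """Return True (safe) only if no blocklisted pattern appears in the prompt."""
    return not any(p.search(prompt) for p in _BLOCKLIST)


def redact(text: str, placeholder: str = "[REDACTED]") -> str:
    """Replace every blocklisted match with a placeholder, e.g. for post-filtering."""
    for p in _BLOCKLIST:
        text = p.sub(placeholder, text)
    return text
```

Regex checks produce both false positives and false negatives, so treat them as a first layer in front of classifiers and human review, not a replacement for them.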

Best practices, recommended tools, pros and cons

Best practices

- Sandbox aggressively, separate experimentation from production.

- Document experiments, including prompts, model versions, and parameters, to reproduce results.

- Apply safety layers, such as input filters, response scoring, and human review.

- Rotate credentials, store secrets in vaults, and limit access by role.
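Documenting prompts, model versions, and parameters is easier when each experiment gets a stable identifier. One way to sketch this, assuming a hypothetical `ExperimentRecord` with illustrative field names, is to hash a canonical JSON form of the record, so the same configuration always maps to the same ID regardless of parameter order.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    """Everything needed to rerun one experiment (field names are illustrative)."""
    prompt: str
    model_version: str
    params: dict = field(default_factory=dict)

    def fingerprint(self) -> str:
        """Short stable ID: SHA-256 of the record serialized as canonical JSON."""
        # sort_keys makes the hash independent of parameter insertion order.
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
```

Storing the fingerprint next to each logged result lets you group runs that used identical settings and spot when a "rerun" silently changed a parameter.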

Recommended tools

- Local model runners, for full control during offline testing.

- Orchestration libraries, to chain models and tools with retry logic.

- Observability stacks, to log and analyze model performance and drift.

- Security tooling, like secret managers and DLP scanners.

Pros

- Rapid learning, full control, deep insight into model behavior, and flexible experimentation.

Cons

- Risk of generating harmful outputs, privacy exposure, and potential violation of provider policies if you try to bypass limits.

Responsible experimentation requires transparent audit trails and clear escalation paths, a principle reflected in major AI governance frameworks.

Challenges, legal and ethical considerations, and troubleshooting

The words “without restrictions” can tempt people to bypass safety systems. I cannot assist with evading safeguards, but I can help you design lawful and ethical exploration methods.

Legal and compliance checklist

- Respect provider terms of service, do not attempt to circumvent rate limits or content controls.

- Avoid unauthorized access, which may violate computer misuse laws. Do not attempt bypasses that would contravene the U.S. Computer Fraud and Abuse Act (CFAA) or similar laws in other jurisdictions.

- Protect personal data, follow privacy requirements like data minimization and secure storage. Use consented data whenever possible.

Ethical guidelines

- Do no harm, do not deploy or publish experiments that could harass or endanger people.

- Transparency, disclose automated decisions when they affect individuals.

- Human in the loop, for high-risk tasks, retain human review before action.
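A human-in-the-loop rule can be enforced in code rather than left to convention. This is a minimal sketch under stated assumptions: `ProposedAction`, `execute_with_review`, and the `approve` hook are hypothetical, and `approve` could be backed by a ticket queue, a CLI prompt, or a review UI. It fails safe: anything not explicitly marked low risk waits for a reviewer.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProposedAction:
    description: str
    risk: str  # e.g. "low" or "high"; how you score risk is up to your policy


def execute_with_review(action: ProposedAction,
                        perform: Callable[[ProposedAction], str],
                        approve: Callable[[ProposedAction], bool]) -> Optional[str]:
    """Run low-risk actions directly; everything else only after human approval.

    `approve` is a hypothetical hook that returns True only when a reviewer signs off.
    """
    if action.risk != "low" and not approve(action):
        return None  # rejected or unreviewed: nothing is executed
    return perform(action)
```

Checking `risk != "low"` instead of `risk == "high"` means an unexpected or misspelled risk label routes to review instead of slipping through.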

Troubleshooting common issues

- Rate limit errors: implement exponential backoff, queue work, and batch requests.

- Unexpected outputs: add response classifiers and rejection criteria, then refine prompts.

- Data leaks: rotate keys immediately, revoke compromised tokens, and audit logs.
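For the "unexpected outputs" case, rejection criteria can be plain code that runs before any downstream step. The sketch below assumes your prompt asks the model for a JSON object; the criteria (a size limit, valid JSON, required keys) and the `rejection_reasons` name are illustrative, not a standard.

```python
import json
from typing import List


def rejection_reasons(raw: str, required_keys: List[str], max_chars: int = 2000) -> List[str]:
    """Return reasons to reject a model response; an empty list means accept."""
    reasons = []
    if len(raw) > max_chars:
        reasons.append("too long")
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return reasons + ["not valid JSON"]
    if not isinstance(data, dict):
        return reasons + ["not a JSON object"]
    missing = [k for k in required_keys if k not in data]
    if missing:
        reasons.append("missing keys: " + ", ".join(missing))
    return reasons
```

Returning reasons instead of a bare boolean makes the rejection log useful when you go back to refine prompts.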

If your intention is to remove safety controls or bypass restrictions, I must decline to provide methods or code to do that, and instead recommend safer alternatives such as local simulations, controlled datasets, or vendor sandbox programs that explicitly allow broad experimentation.

Conclusion and call to action

Exploring AI automation tools without restrictions can mean bold discovery, but it must be tempered by ethics, security, and legal compliance. Start with a narrow hypothesis, sandbox your experiments, use synthetic or consented data, and add safety layers from day one. If you want, I can help you sketch a sandbox plan for a specific use case, build the test harness, or review prompts for safety and clarity. Try a single, measurable experiment today, and we can iterate together.

FAQs

What does “exploring AI automation tools without restrictions” mean?

This phrase often refers to rapid, unconstrained experimentation with AI-driven automation. Practically, it should be interpreted as aggressive but responsible experimentation, using sandboxes and safety controls rather than bypassing restrictions.

How can I test AI tools ethically?

Use synthetic or consented data, isolate experiments in sandboxed environments, add input and output filters, and document the test so observers can audit and reproduce results.

Can I run local models to avoid provider limits?

Yes, local models let you control compute and inputs, but you still must avoid using proprietary or sensitive data without permission, and follow export, privacy, and license terms.

What tools help orchestrate experiments?

Orchestration frameworks, workflow engines, and local model runners help. Pair them with logging, secret management, and monitoring tools for safe experimentation.

How do I prevent accidental data leaks during experiments?

Store secrets in vaults, avoid hard-coding keys, use ephemeral test credentials, and scrub logs of any PII before sharing.
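Two of these habits translate directly into code: read keys from the environment with no hard-coded fallback, and mask known secret values before log lines are written. A minimal sketch using Python's standard `logging` module; `SecretRedactingFilter` and `load_api_key` are hypothetical names, and `AI_KEY` mirrors the variable used in the Node.js harness.

```python
import logging
import os


class SecretRedactingFilter(logging.Filter):
    """Mask known secret values in log messages before a handler writes them."""

    def __init__(self, secrets):
        super().__init__()
        # Ignore empty values so an unset variable doesn't mangle every message.
        self.secrets = [s for s in secrets if s]

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args first
        for secret in self.secrets:
            message = message.replace(secret, "***")
        record.msg, record.args = message, ()
        return True  # keep the record, with secrets masked


def load_api_key(var: str = "AI_KEY") -> str:
    """Read the key from the environment; fail fast instead of using a default."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"{var} is not set")
    return key
```

Attach the filter to your handlers (for example `handler.addFilter(SecretRedactingFilter([load_api_key()]))`) rather than to a single logger, because logger-level filters do not apply to records propagated from child loggers.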

Are there legal risks to broad AI exploration?

Yes, if experiments access protected systems, expose private data, or violate terms of service. Consult legal counsel for high-risk projects.

How do I handle harmful outputs from models?

Automate response filtering, add human review steps, and implement escalation paths. Remove or redact harmful outputs from logs used for analysis.

Can providers help with unrestricted testing?

Some providers offer research or sandbox tiers with relaxed limits for approved projects; apply to those programs rather than bypassing normal protections.